sklearn.feature_selection.f_regression

- sklearn.feature_selection.f_regression(X, y, *, center=True, force_finite=True)

Univariate linear regression tests returning F-statistic and p-values.

Quick linear model for testing the effect of a single regressor, sequentially for many regressors.

This is done in 2 steps:

1. The cross correlation between each regressor and the target is computed using `r_regression` as:

   `E[(X[:, i] - mean(X[:, i])) * (y - mean(y))] / (std(X[:, i]) * std(y))`

2. It is converted to an F score and then to a p-value.

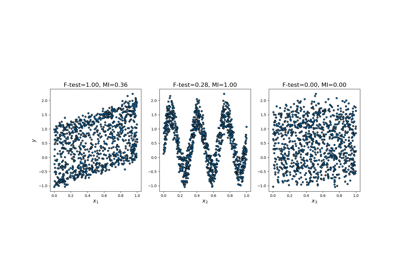

`f_regression` is derived from `r_regression` and will rank features in the same order if all the features are positively correlated with the target.

Note however that contrary to `f_regression`, `r_regression` values lie in [-1, 1] and can thus be negative. `f_regression` is therefore recommended as a feature selection criterion to identify potentially predictive features for a downstream classifier, irrespective of the sign of the association with the target variable.

Furthermore `f_regression` returns p-values while `r_regression` does not.

Read more in the User Guide.

- Parameters:

- X : {array-like, sparse matrix} of shape (n_samples, n_features)

The data matrix.

- y : array-like of shape (n_samples,)

The target vector.

- center : bool, default=True

Whether or not to center the data matrix `X` and the target vector `y`. By default, `X` and `y` will be centered.

- force_finite : bool, default=True

Whether or not to force the F-statistics and associated p-values to be finite. There are two cases where the F-statistic is expected to not be finite:

  - when the target `y` or some features in `X` are constant. In this case, the Pearson’s R correlation is not defined, which leads to `np.nan` values in the F-statistic and p-value. When `force_finite=True`, the F-statistic is set to `0.0` and the associated p-value is set to `1.0`.
  - when a feature in `X` is perfectly correlated (or anti-correlated) with the target `y`. In this case, the F-statistic is expected to be `np.inf`. When `force_finite=True`, the F-statistic is set to `np.finfo(dtype).max` and the associated p-value is set to `0.0`.

New in version 1.1.

- Returns:

- f_statistic : ndarray of shape (n_features,)

F-statistic for each feature.

- p_values : ndarray of shape (n_features,)

P-values associated with the F-statistic.

See also

`r_regression`: Pearson’s R between label/feature for regression tasks.

`f_classif`: ANOVA F-value between label/feature for classification tasks.

`chi2`: Chi-squared stats of non-negative features for classification tasks.

`SelectKBest`: Select features based on the k highest scores.

`SelectFpr`: Select features based on a false positive rate test.

`SelectFdr`: Select features based on an estimated false discovery rate.

`SelectFwe`: Select features based on family-wise error rate.

`SelectPercentile`: Select features based on percentile of the highest scores.

Examples

    >>> from sklearn.datasets import make_regression
    >>> from sklearn.feature_selection import f_regression
    >>> X, y = make_regression(
    ...     n_samples=50, n_features=3, n_informative=1, noise=1e-4, random_state=42
    ... )
    >>> f_statistic, p_values = f_regression(X, y)
    >>> f_statistic
    array([1.2...+00, 2.6...+13, 2.6...+00])
    >>> p_values
    array([2.7..., 1.5..., 1.0...])
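In practice the function is usually passed as a scoring function to one of the selectors listed under "See also". The sketch below reuses the dataset from the example above with `SelectKBest`; keeping a single feature (`k=1`) is an arbitrary choice made for illustration.

```python
from sklearn.datasets import make_regression
from sklearn.feature_selection import SelectKBest, f_regression

X, y = make_regression(
    n_samples=50, n_features=3, n_informative=1, noise=1e-4, random_state=42
)

# Keep the single feature with the highest F-statistic.
selector = SelectKBest(score_func=f_regression, k=1)
X_selected = selector.fit_transform(X, y)

print(X_selected.shape)        # (50, 1)
print(selector.get_support())  # boolean mask over the original features
print(selector.scores_)        # F-statistics computed by f_regression
```

After fitting, `selector.scores_` holds the F-statistics and `selector.pvalues_` the associated p-values, so both outputs of `f_regression` stay available for inspection.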