sklearn.linear_model.enet_path#

- sklearn.linear_model.enet_path(X, y, *, l1_ratio=0.5, eps=0.001, n_alphas=100, alphas=None, precompute='auto', Xy=None, copy_X=True, coef_init=None, verbose=False, return_n_iter=False, positive=False, check_input=True, **params)[source]#

Compute elastic net path with coordinate descent.

The elastic net optimization function varies for mono and multi-outputs.

For mono-output tasks it is:

1 / (2 * n_samples) * ||y - Xw||^2_2 + alpha * l1_ratio * ||w||_1 + 0.5 * alpha * (1 - l1_ratio) * ||w||^2_2

For multi-output tasks it is:

(1 / (2 * n_samples)) * ||Y - XW||_Fro^2 + alpha * l1_ratio * ||W||_21 + 0.5 * alpha * (1 - l1_ratio) * ||W||_Fro^2

Where:

||W||_21 = \sum_i \sqrt{\sum_j w_{ij}^2}

i.e. the sum of norm of each row.

Read more in the User Guide.

- Parameters:

- X{array-like, sparse matrix} of shape (n_samples, n_features)

Training data. Pass directly as Fortran-contiguous data to avoid unnecessary memory duplication. If

yis mono-output thenXcan be sparse.- y{array-like, sparse matrix} of shape (n_samples,) or (n_samples, n_targets)

Target values.

- l1_ratiofloat, default=0.5

Number between 0 and 1 passed to elastic net (scaling between l1 and l2 penalties).

l1_ratio=1corresponds to the Lasso.- epsfloat, default=1e-3

Length of the path.

eps=1e-3means thatalpha_min / alpha_max = 1e-3.- n_alphasint, default=100

Number of alphas along the regularization path.

- alphasarray-like, default=None

List of alphas where to compute the models. If None alphas are set automatically.

- precompute‘auto’, bool or array-like of shape (n_features, n_features), default=’auto’

Whether to use a precomputed Gram matrix to speed up calculations. If set to

'auto'let us decide. The Gram matrix can also be passed as argument.- Xyarray-like of shape (n_features,) or (n_features, n_targets), default=None

Xy = np.dot(X.T, y) that can be precomputed. It is useful only when the Gram matrix is precomputed.

- copy_Xbool, default=True

If

True, X will be copied; else, it may be overwritten.- coef_initarray-like of shape (n_features, ), default=None

The initial values of the coefficients.

- verbosebool or int, default=False

Amount of verbosity.

- return_n_iterbool, default=False

Whether to return the number of iterations or not.

- positivebool, default=False

If set to True, forces coefficients to be positive. (Only allowed when

y.ndim == 1).- check_inputbool, default=True

If set to False, the input validation checks are skipped (including the Gram matrix when provided). It is assumed that they are handled by the caller.

- **paramskwargs

Keyword arguments passed to the coordinate descent solver.

- Returns:

- alphasndarray of shape (n_alphas,)

The alphas along the path where models are computed.

- coefsndarray of shape (n_features, n_alphas) or (n_targets, n_features, n_alphas)

Coefficients along the path.

- dual_gapsndarray of shape (n_alphas,)

The dual gaps at the end of the optimization for each alpha.

- n_iterslist of int

The number of iterations taken by the coordinate descent optimizer to reach the specified tolerance for each alpha. (Is returned when

return_n_iteris set to True).

See also

MultiTaskElasticNetMulti-task ElasticNet model trained with L1/L2 mixed-norm as regularizer.

MultiTaskElasticNetCVMulti-task L1/L2 ElasticNet with built-in cross-validation.

ElasticNetLinear regression with combined L1 and L2 priors as regularizer.

ElasticNetCVElastic Net model with iterative fitting along a regularization path.

Notes

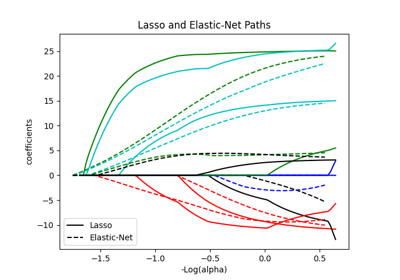

For an example, see examples/linear_model/plot_lasso_coordinate_descent_path.py.