sklearn.metrics.mean_squared_error

- sklearn.metrics.mean_squared_error(y_true, y_pred, *, sample_weight=None, multioutput='uniform_average', squared='deprecated')

Mean squared error regression loss.

Read more in the User Guide.
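For orientation, the loss is usually written as below. This is the standard textbook definition rather than text from this page, and the symbols are notation assumed here: $n$ is the number of samples, $y_i$ and $\hat{y}_i$ are the true and predicted values for sample $i$, and $w_i$ is an optional sample weight.

$$
\mathrm{MSE}(y, \hat{y}) = \frac{1}{n} \sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^2,
\qquad
\mathrm{MSE}_w(y, \hat{y}) = \frac{\sum_{i=1}^{n} w_i \left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n} w_i}
$$

For instance, with true values 3, -0.5, 2, 7 and predictions 2.5, 0.0, 2, 8, the squared errors are 0.25, 0.25, 0 and 1, so the unweighted value is 1.5 / 4 = 0.375.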

- Parameters:

- y_true : array-like of shape (n_samples,) or (n_samples, n_outputs)

Ground truth (correct) target values.

- y_pred : array-like of shape (n_samples,) or (n_samples, n_outputs)

Estimated target values.

- sample_weight : array-like of shape (n_samples,), default=None

Sample weights.

- multioutput : {'raw_values', 'uniform_average'} or array-like of shape (n_outputs,), default='uniform_average'

Defines how the errors of multiple outputs are aggregated. An array-like value gives the weights used to average the per-output errors.

- 'raw_values' :

Returns one error per output when the input has multiple outputs.

- 'uniform_average' :

Errors of all outputs are averaged with uniform weight.

- squared : bool, default=True

If True, returns the MSE value; if False, returns the RMSE value.

Deprecated since version 1.4:

`squared` is deprecated in 1.4 and will be removed in 1.6. Use `root_mean_squared_error` instead to calculate the root mean squared error.
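As a hedged illustration of moving away from `squared=False`, the sketch below computes the RMSE for a single-output problem in two ways: as the square root of the MSE, which does not depend on the scikit-learn version, and with `root_mean_squared_error` when it can be imported. The `try`/`except` guard is an assumption added here so the snippet also runs on older installations.

```python
import numpy as np
from sklearn.metrics import mean_squared_error

y_true = [3, -0.5, 2, 7]
y_pred = [2.5, 0.0, 2, 8]

mse = mean_squared_error(y_true, y_pred)

# Version-independent RMSE for a single output: square root of the MSE.
rmse = float(np.sqrt(mse))

# On scikit-learn 1.4 or later the dedicated function is available.
try:
    from sklearn.metrics import root_mean_squared_error
except ImportError:  # older scikit-learn without the new function
    root_mean_squared_error = None

if root_mean_squared_error is not None:
    # Both routes should agree for single-output data.
    assert np.isclose(root_mean_squared_error(y_true, y_pred), rmse)
```

For multioutput data the two routes are not interchangeable in general: taking the square root of an averaged MSE is not the same as averaging per-output RMSE values, so it is safer to compare per-output results via `multioutput='raw_values'`.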

- Returns:

- loss : float or ndarray of floats

A non-negative floating point value (the best value is 0.0), or an array of floating point values, one for each individual target.
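The parameters and return value described above can be read as the following NumPy computation. This is a minimal sketch for illustration, not scikit-learn's implementation: `mse_sketch` is a hypothetical helper, and it skips the input validation the library performs.

```python
import numpy as np


def mse_sketch(y_true, y_pred, sample_weight=None, multioutput="uniform_average"):
    """Hypothetical helper mirroring the documented behaviour (no validation)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    # Treat 1-D targets as a single output column.
    if y_true.ndim == 1:
        y_true = y_true.reshape(-1, 1)
        y_pred = y_pred.reshape(-1, 1)

    # One error per output: (weighted) mean of squared residuals over samples.
    per_output = np.average((y_true - y_pred) ** 2, axis=0, weights=sample_weight)

    if isinstance(multioutput, str):
        if multioutput == "raw_values":
            return per_output  # ndarray, one value per output
        if multioutput == "uniform_average":
            return float(per_output.mean())  # single float
        raise ValueError(f"unsupported multioutput: {multioutput!r}")

    # Array-like: weights used to average the per-output errors.
    return float(np.average(per_output, weights=multioutput))


y_true = [[0.5, 1], [-1, 1], [7, -6]]
y_pred = [[0, 2], [-1, 2], [8, -5]]

raw = mse_sketch(y_true, y_pred, multioutput="raw_values")
uniform = mse_sketch(y_true, y_pred)
custom = mse_sketch(y_true, y_pred, multioutput=[0.3, 0.7])
```

Here `raw` is an array with one entry per output, while `uniform` and `custom` are single floats, matching the two return types listed above.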

Examples

    >>> from sklearn.metrics import mean_squared_error
    >>> y_true = [3, -0.5, 2, 7]
    >>> y_pred = [2.5, 0.0, 2, 8]
    >>> mean_squared_error(y_true, y_pred)
    0.375
    >>> y_true = [[0.5, 1],[-1, 1],[7, -6]]
    >>> y_pred = [[0, 2],[-1, 2],[8, -5]]
    >>> mean_squared_error(y_true, y_pred)
    0.708...
    >>> mean_squared_error(y_true, y_pred, multioutput='raw_values')
    array([0.41666667, 1.        ])
    >>> mean_squared_error(y_true, y_pred, multioutput=[0.3, 0.7])
    0.825...
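The `sample_weight` parameter is not exercised in the examples on this page, so here is a small additional sketch. The weights are illustrative values chosen here (an assumption), counting the last sample twice.

```python
from sklearn.metrics import mean_squared_error

y_true = [3, -0.5, 2, 7]
y_pred = [2.5, 0.0, 2, 8]

# Squared errors per sample are 0.25, 0.25, 0.0 and 1.0.
unweighted = mean_squared_error(y_true, y_pred)

# Illustrative weights (an assumption): the last sample counts twice.
weighted = mean_squared_error(y_true, y_pred, sample_weight=[1, 1, 1, 2])

print(unweighted)  # 0.375
print(weighted)    # 0.5, i.e. (0.25 + 0.25 + 0.0 + 2 * 1.0) / 5
```

Because the weights are normalised by their sum, scaling all of them by the same constant leaves the result unchanged.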

Examples using sklearn.metrics.mean_squared_error

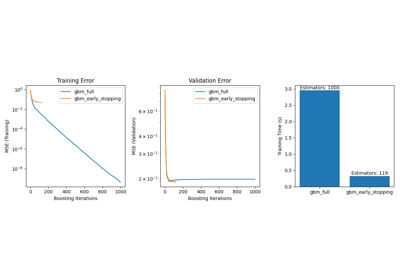

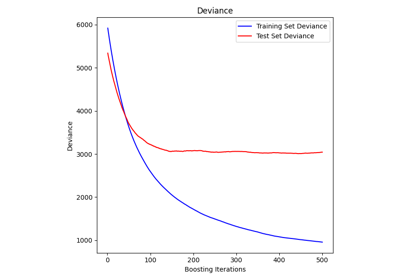

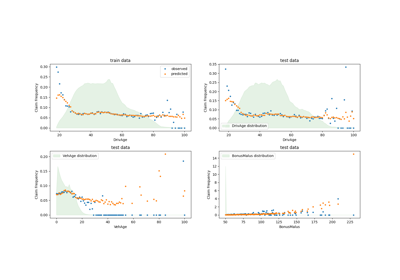

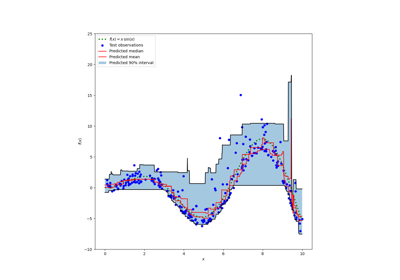

Prediction Intervals for Gradient Boosting Regression

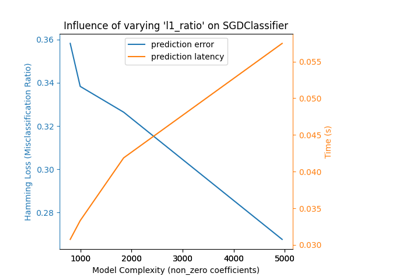

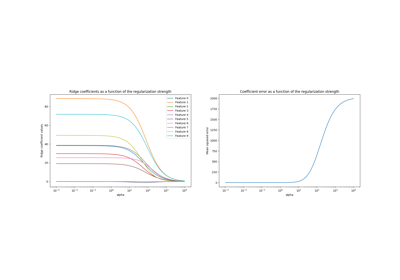

Ridge coefficients as a function of the L2 Regularization